The beast has finally been unleashed! NVIDIA has decided to launch the super-performing GeForce GTX Titan for the consumer market, or at least for those with deep pockets. The GK110 GPU behind it has 15 SMXes, each composed of a number of functional units: 192 FP32 CUDA cores, 64 FP64 CUDA cores, 64KB of L1 cache, 65K 32-bit registers, and 16 texture units. To round out these specifications, the GK110 has 6 ROP partitions, each with 8 ROPs and 256KB of L2 cache, and each connected to a 64-bit memory controller.
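To see how the per-partition figures add up across the whole chip, here is a small Python sketch. The constant and function names are purely illustrative, and the totals are derived from the numbers quoted above rather than copied from an official spec sheet.

```python
# Per-partition figures as quoted in the article (names are illustrative).
ROP_PARTITIONS = 6
ROPS_PER_PARTITION = 8
L2_KB_PER_PARTITION = 256
MEM_CONTROLLER_BITS = 64  # one 64-bit memory controller per ROP partition


def gk110_backend_totals():
    """Derive chip-wide back-end totals from the per-partition figures."""
    return {
        "rops": ROP_PARTITIONS * ROPS_PER_PARTITION,
        "l2_kb": ROP_PARTITIONS * L2_KB_PER_PARTITION,
        "bus_width_bits": ROP_PARTITIONS * MEM_CONTROLLER_BITS,
    }


if __name__ == "__main__":
    for name, value in gk110_backend_totals().items():
        print(f"{name}: {value}")
```

Six 64-bit controllers is where the 384-bit memory bus comes from, and the same multiplication gives the ROP and L2 totals.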

Coming in at a massive 7.1 billion transistors, it takes up 551mm² on TSMC’s 28nm process. The GK110 was originally meant to be the flagship of the 600 series, but NVIDIA had other plans: it held the GPU back and led with the GK104 core instead. After winning the bid for Oak Ridge National Laboratory’s Titan supercomputer, NVIDIA probably poured every GK110 chip it had into Tesla K20X cards, but it is now ready to release the second device based on the GK110 (technically the third, if the Tesla K20 and K20X are counted separately), which it calls its greatest accomplishment for the consumer market.

The GeForce GTX Titan uses a cut-down GK110 core with all 6 ROP partitions and the full 384-bit memory bus enabled, but only 14 of the 15 SMXes, which means the Titan will be swinging with 2688 FP32 CUDA cores and 896 FP64 CUDA cores. Fear not, for this is the same configuration as the K20X NVIDIA is currently shipping; no GK110 shipping today has all 15 SMXes enabled anyway, and no one outside NVIDIA really knows why. The GTX 680 ships with a base clock of 1006MHz, but that is not the case for the GeForce GTX Titan: since it is a much bigger GPU, it has to be clocked down to a more reasonable 837MHz, with a boost clock of 876MHz, which isn’t much of a boost, to be quite frank. However, NVIDIA was kind enough to slap 6GB of GDDR5 RAM on the card, making it ideal for those with multi-monitor setups, and the wide memory bus gives it more bandwidth than it knows what to do with, alongside a whole lot more shading, compute, and texturing performance. Since Titan is based on a compute GPU, enthusiasts also get that extra compute horsepower without the limitations that held back other GeForce GPUs in an effort to protect NVIDIA’s Tesla lineup.
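The core counts and clocks above are easy to sanity-check with a few lines of Python. This is only a rough sketch: the per-SMX counts are obtained by dividing the quoted totals by 14, the function names are made up for illustration, and the peak-throughput figure assumes one fused multiply-add (2 FLOPs) per FP32 core per clock at the base clock, which is a theoretical ceiling and not a benchmark result.

```python
SMX_ENABLED = 14
FP32_PER_SMX = 192  # 2688 FP32 cores / 14 SMXes
FP64_PER_SMX = 64   # 896 FP64 cores / 14 SMXes
BASE_MHZ = 837
BOOST_MHZ = 876


def core_counts(smx=SMX_ENABLED):
    """Return (FP32 cores, FP64 cores) for a given number of enabled SMXes."""
    return smx * FP32_PER_SMX, smx * FP64_PER_SMX


def boost_gain_percent(base=BASE_MHZ, boost=BOOST_MHZ):
    """Return how far the boost clock sits above the base clock, in percent."""
    return (boost - base) / base * 100


def peak_fp32_gflops(cores, clock_mhz):
    """Theoretical peak, assuming 2 FLOPs (one FMA) per core per clock."""
    return cores * 2 * clock_mhz / 1000


if __name__ == "__main__":
    fp32, fp64 = core_counts()
    print(f"FP32 cores: {fp32}, FP64 cores: {fp64}")
    print(f"Boost over base: {boost_gain_percent():.1f}%")
    print(f"Peak FP32 at base clock: {peak_fp32_gflops(fp32, BASE_MHZ):.0f} GFLOPS")
```

Under those assumptions the boost clock works out to under 5% above base, which backs up the complaint that it isn’t much of a boost.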

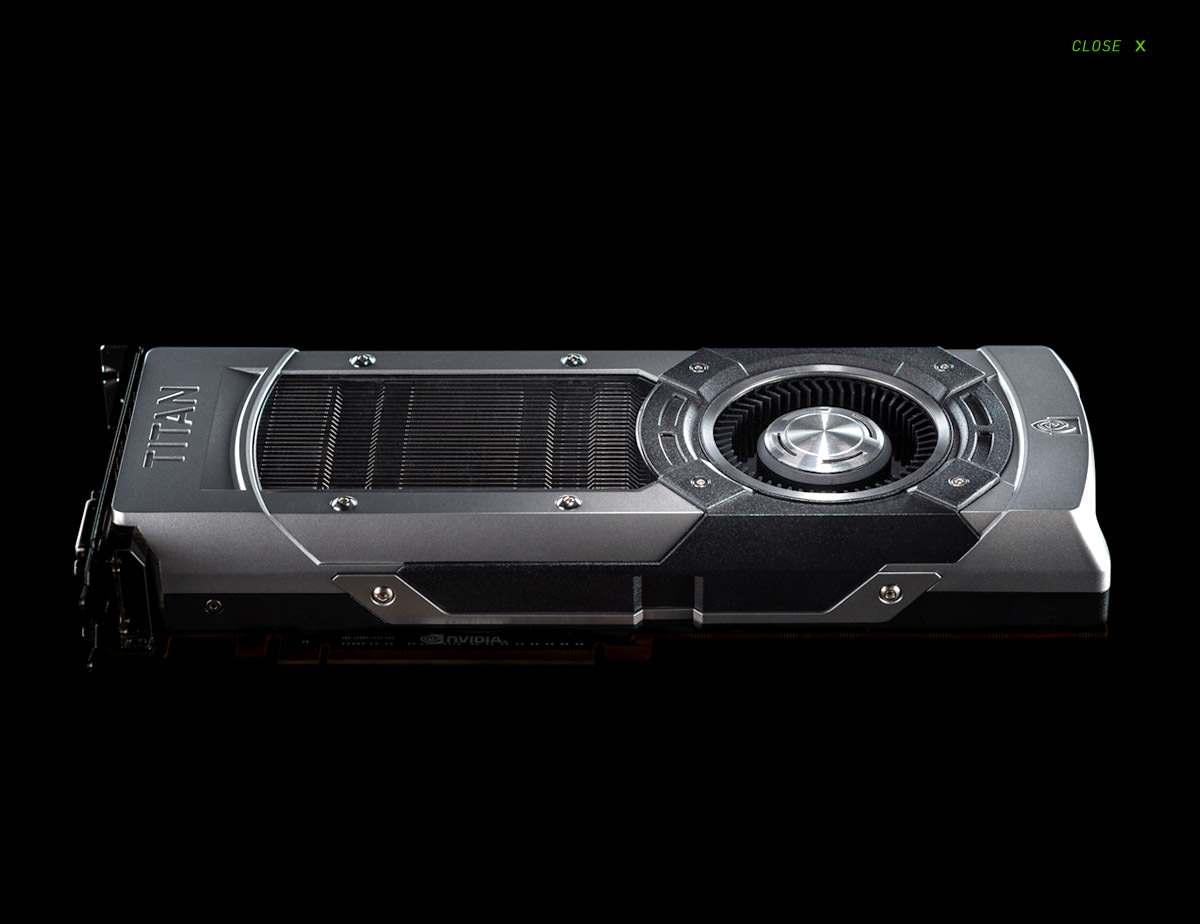

Titan uses the same style of cooler as the GTX 690 and 590 before it. Showing off its luxury status, there is no plastic to be found on the card apart from a polycarbonate window that lets you see the heatsink to your heart’s content, and NVIDIA has tried to make it as clean as possible. Titan moves on from the 4+2 power phase design that debuted on the GTX 680 to a 6+2 power phase design. A 6-pin and an 8-pin connector power the card, allowing for a total of 300W of capacity, with Titan sitting comfortably at a TDP of 250W and leaving a little headroom for extreme overclockers. In addition, Titan follows precedent with two DL-DVI ports, one HDMI port, and one full-size DisplayPort.
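The 300W figure comes from adding up what each power source is rated to deliver. The Python sketch below assumes the usual PCI Express limits of 75W from the slot, 75W from a 6-pin connector, and 150W from an 8-pin connector; the names are illustrative only.

```python
# Assumed PCI Express power limits, in watts.
PCIE_SLOT_W = 75
SIX_PIN_W = 75
EIGHT_PIN_W = 150
TITAN_TDP_W = 250  # TDP quoted in the article


def board_power_capacity(six_pins=1, eight_pins=1):
    """Total rated power from the slot plus the auxiliary connectors."""
    return PCIE_SLOT_W + six_pins * SIX_PIN_W + eight_pins * EIGHT_PIN_W


def headroom(tdp=TITAN_TDP_W):
    """Rated capacity left over above the card's TDP."""
    return board_power_capacity() - tdp


if __name__ == "__main__":
    print(f"Capacity: {board_power_capacity()}W, headroom: {headroom()}W")
```

With one 6-pin and one 8-pin connector, that leaves roughly 50W of rated capacity above the TDP for overclocking.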